How to Easily Make a Local Copy of a Website

Sometimes you want to copy an entire website onto your local computer. How hard that is varies from website to website. How much of it is backend-dependent? Do you have FTP access? Are paths relative or absolute? And so on. If you have absolutely no support from the website owner, don’t worry! You can still pretty easily make a primitive backup that you can navigate locally on your machine as an archive.

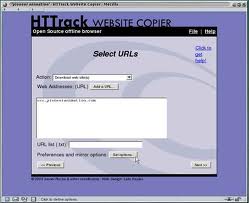

That’s where a nifty piece of software called HTTrack Website Copier comes in. It crawls the website you give it and makes a copy of every single page it can find, images included. And the best part? It’s free!

When a client is about to launch a new version of their website and wants a local copy of the old one that can easily be navigated, this software is king.
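HTTrack also ships a command-line version, so a mirror can be scripted. Here is a minimal Python sketch that assumes the `httrack` binary is installed and on your PATH. The helper names, the `https://example.com/` URL, and the `./old-site` folder are placeholders made up for illustration. The only option used is `-O`, which sets the output directory.

```python
import shutil
import subprocess
from urllib.parse import urlparse


def build_httrack_command(url: str, output_dir: str) -> list[str]:
    """Return the argument list for a basic HTTrack mirror of `url`."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"expected an http(s) URL, got {url!r}")
    # -O sets the directory where HTTrack writes the mirror, logs, and cache.
    return ["httrack", url, "-O", output_dir]


def mirror_with_httrack(url: str, output_dir: str) -> int:
    """Run HTTrack if it is installed and return its exit code."""
    if shutil.which("httrack") is None:
        raise FileNotFoundError("httrack was not found on PATH")
    return subprocess.run(build_httrack_command(url, output_dir)).returncode


if __name__ == "__main__":
    # Placeholder values, not taken from any real project.
    print(build_httrack_command("https://example.com/", "./old-site"))
```

Calling `mirror_with_httrack("https://example.com/", "./old-site")` would start the actual crawl. Building the command separately from running it makes it easy to check what will be executed first.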

(Note: There is also the well-known wget, which works incredibly well. It is a command-line tool, however, so some people may find it harder to use.)
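For the curious, here is a rough sketch of what a wget mirror might look like when driven from Python. It assumes GNU wget is installed. The function name, URL, and output folder are hypothetical placeholders, and this particular combination of options is one common choice rather than the only way to do it.

```python
import shlex


def build_wget_command(url: str, output_dir: str) -> list[str]:
    """Return the argument list for a browsable offline copy of `url`."""
    return [
        "wget",
        "--mirror",            # recursion with timestamping, unlimited depth
        "--convert-links",     # rewrite links so pages work offline
        "--page-requisites",   # also fetch images, CSS, and scripts
        "--adjust-extension",  # save HTML/CSS with matching file extensions
        "--no-parent",         # never climb above the starting directory
        "--directory-prefix", output_dir,  # where downloaded files are saved
        url,
    ]


if __name__ == "__main__":
    # Placeholder values; print the command so it can be reviewed or pasted
    # into a terminal. Pass the list to subprocess.run() to execute it.
    command = build_wget_command("https://example.com/", "./old-site")
    print(shlex.join(command))
```

Printing the command instead of running it right away lets you double-check the target before kicking off a crawl that may download a lot of files.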